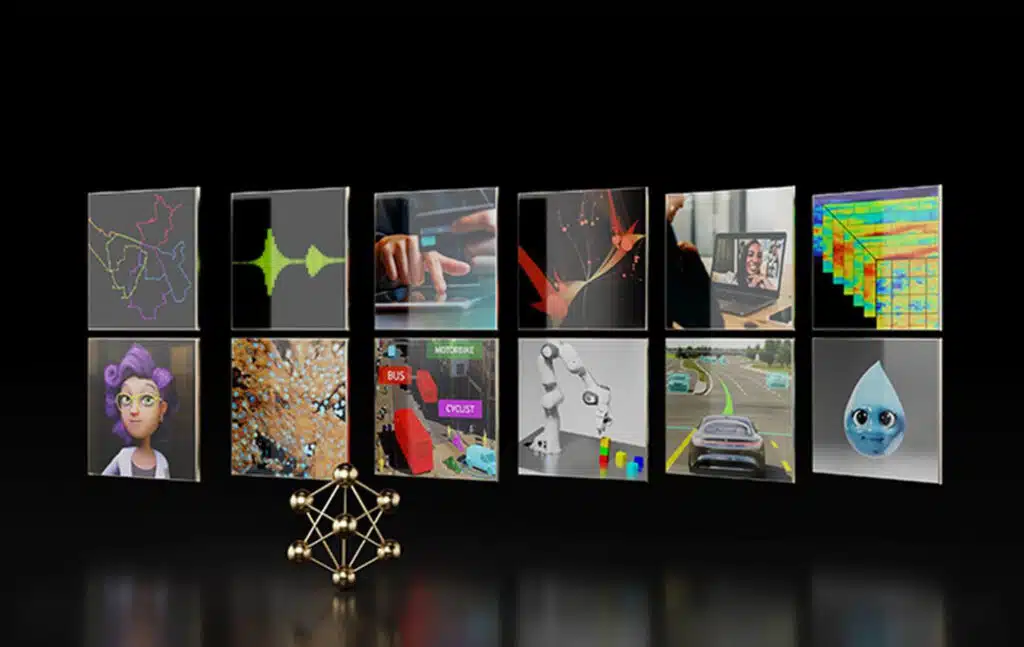

In today’s rapidly evolving technological landscape, artificial intelligence (AI) has become an indispensable tool for businesses across various industries. While cloud-based training platforms offer convenience and scalability, training AI models locally on desktop workstations presents several compelling advantages, particularly in terms of cost-effectiveness and data security. This article will delve into the benefits of local AI training, explore the role of NVIDIA AI Enterprise and open-source tools, and discuss the advantages of leveraging NVIDIA professional GPUs to accelerate AI workflows.

The Case for Local AI Training

Cost-Efficiency:

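- Initial Investment: A workstation requires an upfront purchase, but it is a one-time cost, whereas cloud GPU instances are billed for as long as they run. For teams that train or fine-tune models regularly, that purchase can cost less than the cumulative bill for comparable cloud capacity, and a workstation is far more affordable than the multi-GPU servers designed for large-scale training.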

- Iterative Development: Local training environments provide a flexible platform for experimentation and iterative development. This allows developers to validate project concepts and refine models before committing to more significant hardware investments.
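To make the cost trade-off concrete, the sketch below finds the break-even point: the number of GPU-hours after which buying a workstation becomes cheaper than renting cloud capacity. Every price in it is a hypothetical placeholder, not a quote, and the model deliberately ignores depreciation, maintenance, staff time, storage, and data-transfer fees.

```python
def break_even_hours(workstation_cost: float,
                     cloud_rate_per_hour: float,
                     local_power_kw: float = 0.0,
                     electricity_per_kwh: float = 0.0) -> float:
    """Return the GPU-hours of use after which owning beats renting.

    Owning costs the purchase price plus electricity for each hour of use;
    renting costs the cloud rate for each hour. Break-even is where
        workstation_cost + h * local_cost_per_hour == h * cloud_rate_per_hour
    """
    local_cost_per_hour = local_power_kw * electricity_per_kwh
    saving_per_hour = cloud_rate_per_hour - local_cost_per_hour
    if saving_per_hour <= 0:
        # Renting is never more expensive per hour, so there is no break-even.
        raise ValueError("cloud rate must exceed the local running cost")
    return workstation_cost / saving_per_hour


# Hypothetical figures, for illustration only.
hours = break_even_hours(workstation_cost=10_000,
                         cloud_rate_per_hour=2.50,
                         local_power_kw=0.5,
                         electricity_per_kwh=0.30)
# Assumes single-shift use: 8 hours/day, 21 working days/month.
print(f"Break-even after about {hours:,.0f} GPU-hours "
      f"(~{hours / (8 * 21):.1f} months of single-shift use)")
```

With these placeholder figures the purchase pays for itself after roughly 4,255 GPU-hours, a little over two years of single-shift use; a team that keeps the GPU busy around the clock reaches that point much sooner.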

Data Security:

- Privacy Concerns: Training AI models on sensitive data can raise privacy concerns. Keeping all training data on local hardware means it never has to be uploaded to a third-party service, which reduces the risk of data breaches and makes it easier to comply with data protection regulations.

- Intellectual Property: Local training helps protect intellectual property by keeping proprietary datasets and trained model weights on hardware the organisation controls, reducing the opportunities for unauthorised access.

NVIDIA AI Enterprise: A Comprehensive Solution

NVIDIA AI Enterprise is a comprehensive software suite designed to simplify and accelerate AI development and deployment. It provides supported, optimised AI frameworks and tools built on core NVIDIA GPU libraries such as:

- NVIDIA CUDA: A parallel computing platform and programming model for NVIDIA GPUs.

- NVIDIA cuDNN: A GPU-accelerated library for deep neural networks.

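These libraries are also freely available on their own; what NVIDIA AI Enterprise adds is a pre-integrated, tested, and supported stack, so organisations spend less time assembling and maintaining software and can shorten development time.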
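To confirm that CUDA and cuDNN are actually usable on a workstation, one option is to query them from a framework. The sketch below assumes PyTorch is the framework in use (any CUDA-enabled framework would do) and relies only on PyTorch's public `torch.cuda` and `torch.backends.cudnn` functions; it returns a summary instead of raising when PyTorch or a GPU is missing.

```python
import importlib.util


def report_gpu_stack() -> dict:
    """Summarise which parts of the CUDA/cuDNN stack PyTorch can see.

    Returns a dict so the result can be logged or checked in a script.
    If PyTorch is not installed, the dict says so instead of raising.
    """
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}

    import torch

    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version PyTorch was built against (None for CPU-only builds).
        "cuda_build": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
        "cudnn_available": torch.backends.cudnn.is_available(),
        # Integer encoding of the cuDNN version, or None if cuDNN is absent.
        "cudnn_version": torch.backends.cudnn.version(),
        "gpus": [],
    }
    if info["cuda_available"]:
        for i in range(torch.cuda.device_count()):
            props = torch.cuda.get_device_properties(i)
            info["gpus"].append({
                "name": props.name,
                "memory_gib": round(props.total_memory / 2**30, 1),
                "compute_capability": f"{props.major}.{props.minor}",
            })
    return info


if __name__ == "__main__":
    for key, value in report_gpu_stack().items():
        print(f"{key}: {value}")
```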

Open-Source Tools: Flexibility and Community Support

In addition to commercial solutions like NVIDIA AI Enterprise, open-source tools such as Docker, PyTorch, and TensorFlow offer flexibility and the support of large, active communities.

- Docker: A platform for building, shipping, and running applications in containers. Docker can be used to create isolated environments for AI development and deployment, ensuring consistency and reproducibility.

- PyTorch: A popular deep learning framework known for its flexibility and ease of use. PyTorch provides a rich ecosystem of libraries and tools for building and training AI models.

- TensorFlow: An open-source machine learning framework originally developed by Google, widely used for both training and deploying models.
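A common local setup combines these tools: a framework container runs with GPU access while the training data stays on the workstation's own disk. The Python sketch below only builds the `docker run` argument list; it assumes Docker and the NVIDIA Container Toolkit are installed on the host (the `--gpus` flag depends on the toolkit), and the image tag and `./datasets` directory are illustrative placeholders. NGC publishes PyTorch containers under `nvcr.io/nvidia/pytorch`, but check the catalogue for current tags.

```python
import shlex
from pathlib import Path
from typing import List, Optional


def gpu_container_command(image: str, data_dir: str,
                          command: Optional[List[str]] = None) -> List[str]:
    """Build a `docker run` argument list for a GPU training container.

    --gpus all exposes the host's NVIDIA GPUs (requires the NVIDIA Container
    Toolkit). The local dataset is bind-mounted read-only at /data, so the
    data never leaves the workstation and the container cannot modify it.
    """
    data_path = Path(data_dir).resolve()
    args = [
        "docker", "run", "--rm",
        "--gpus", "all",
        "-v", f"{data_path}:/data:ro",
        image,
    ]
    # Default to nvidia-smi as a quick check that the GPUs are visible.
    args.extend(command or ["nvidia-smi"])
    return args


cmd = gpu_container_command(
    image="nvcr.io/nvidia/pytorch:24.05-py3",  # example tag; check NGC for current ones
    data_dir="./datasets",                      # hypothetical local data directory
)
print(shlex.join(cmd))
# To launch it: subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell-quoting problems when it is later passed to `subprocess.run`.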

The Power of NVIDIA Professional GPUs

NVIDIA professional GPUs, such as the NVIDIA RTX workstation series, are built for demanding compute and graphics workloads, including AI. They offer:

- Tensor Cores: Specialised hardware units that perform mixed-precision matrix multiply-accumulate operations at very high throughput; matrix multiplication dominates the cost of training deep neural networks.

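- Large, Fast Memory: Generous on-board memory lets larger models and batch sizes fit on a single card, and high memory bandwidth keeps the compute units supplied with data, improving training performance.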

- Driver Support: NVIDIA professional GPUs use enterprise-grade drivers that are validated with professional applications, which helps with stability and compatibility.
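Memory capacity often decides whether a model can be trained on a single workstation GPU at all. The rough estimate below uses a common rule of thumb for full training with the Adam optimiser in mixed precision: about 16 bytes per parameter (FP16 weights and gradients, plus FP32 master weights and two FP32 Adam moments). These byte counts are an assumption about the training setup, not a measurement; activation memory depends on batch size and sequence length and is excluded, and the 48 GiB card and 20% headroom are hypothetical.

```python
# Bytes per parameter for full training with Adam in mixed precision
# (rule of thumb; activations are NOT included).
BYTES_PER_PARAM = {
    "fp16_weights": 2,
    "fp16_gradients": 2,
    "fp32_master_weights": 4,
    "adam_first_moment": 4,
    "adam_second_moment": 4,
}


def training_memory_gib(num_params: float) -> float:
    """Estimate GPU memory (GiB) for model states, excluding activations."""
    return num_params * sum(BYTES_PER_PARAM.values()) / 2**30


def fits_on_gpu(num_params: float, gpu_memory_gib: float,
                activation_headroom: float = 0.2) -> bool:
    """True if model states fit while reserving a fraction for activations.

    activation_headroom is a hypothetical safety margin, not a measured value.
    """
    usable = gpu_memory_gib * (1 - activation_headroom)
    return training_memory_gib(num_params) <= usable


for params in (125e6, 1.3e9, 7e9):
    need = training_memory_gib(params)
    print(f"{params / 1e9:>5.2f}B params -> ~{need:6.1f} GiB; "
          f"fits in 48 GiB: {fits_on_gpu(params, 48)}")
```

Under these assumptions the 125M- and 1.3B-parameter models fit comfortably, while a 7B-parameter model needs roughly 104 GiB for model states alone.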

By leveraging NVIDIA professional GPUs, organisations can substantially reduce training time; faster iterations in turn leave room for more experiments, which can lead to more accurate models.
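Tensor Cores are usually engaged through mixed-precision training rather than programmed directly. The PyTorch sketch below uses `torch.autocast` so that matrix multiplications run in float16 on a CUDA GPU, where the underlying libraries can dispatch them to Tensor Cores, and a `GradScaler` to keep small float16 gradients from underflowing. The toy model and random data are placeholders for a real model and local dataset, and the CPU fallback (bfloat16, no scaling) exists only so the sketch runs without a GPU; actual speed-ups depend on the model, GPU, and batch size.

```python
import torch
from torch import nn


def train_steps(steps: int = 10) -> float:
    """Run a few mixed-precision training steps on a toy model; return the last loss."""
    if steps < 1:
        raise ValueError("steps must be at least 1")

    device = "cuda" if torch.cuda.is_available() else "cpu"
    # float16 on GPU (Tensor Core friendly); bfloat16 autocast on CPU fallback.
    amp_dtype = torch.float16 if device == "cuda" else torch.bfloat16

    torch.manual_seed(0)
    model = nn.Sequential(nn.Linear(512, 1024), nn.ReLU(), nn.Linear(1024, 10)).to(device)
    optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)
    # Loss scaling is only needed for float16; when disabled it is a pass-through.
    # (On PyTorch 2.3+, torch.amp.GradScaler("cuda", ...) is the newer spelling.)
    scaler = torch.cuda.amp.GradScaler(enabled=(device == "cuda"))
    loss_fn = nn.CrossEntropyLoss()

    # Synthetic data stands in for a real local dataset.
    x = torch.randn(256, 512, device=device)
    y = torch.randint(0, 10, (256,), device=device)

    for _ in range(steps):
        optimizer.zero_grad(set_to_none=True)
        with torch.autocast(device_type=device, dtype=amp_dtype):
            loss = loss_fn(model(x), y)
        scaler.scale(loss).backward()  # scaled backward pass
        scaler.step(optimizer)         # unscales gradients, then steps
        scaler.update()                # adjusts the scale factor for the next step
    return loss.item()


if __name__ == "__main__":
    print(f"final loss: {train_steps():.4f}")
```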

Conclusion

Training AI models locally on AI workstations offers a cost-effective and secure approach, particularly for small- to medium-sized projects. By combining the power of NVIDIA AI Enterprise, open-source tools, and NVIDIA professional GPUs, organisations can accelerate AI development and deployment while protecting sensitive data. As AI continues to evolve, local training will remain an important option for businesses seeking to harness the benefits of this transformative technology.